Workshop description

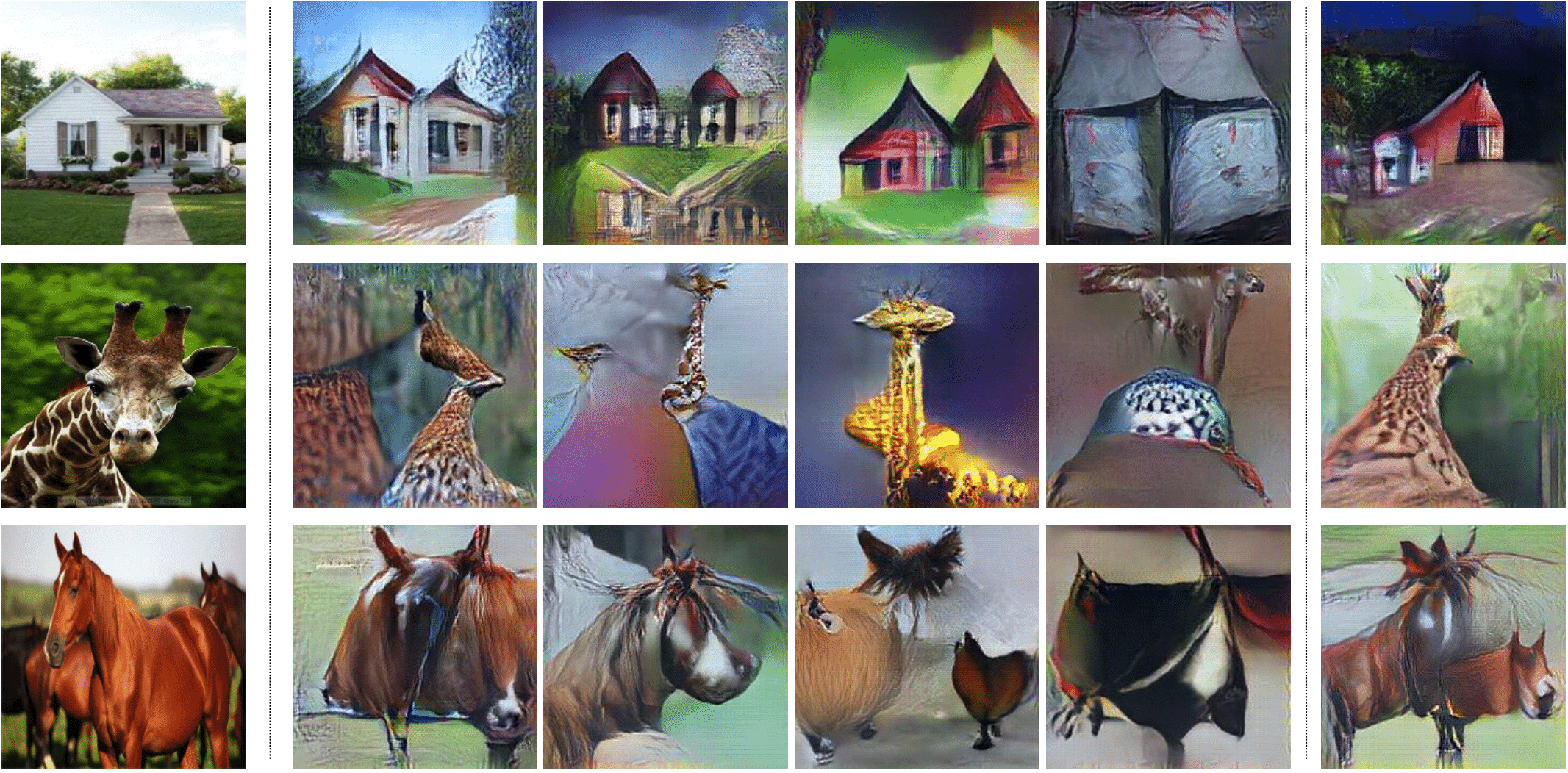

The real challenge for any machine learning system is to be reliable and robust in any situation, even when conditions differ from those seen during training. Existing general-purpose approaches to domain generalization (DG), a problem setting that challenges a model to generalize well to data outside the distribution sampled at training time, have failed to consistently outperform standard empirical risk minimization baselines. In this workshop, we aim to work towards answering a single question: what do we need for successful domain generalization? We conjecture that additional information of some form is required for general-purpose learning methods to be successful in the DG setting. The purpose of this workshop is to identify possible sources of such information and to demonstrate how these extra sources of data can be leveraged to construct models that are robust to distribution shift. Specific topics of interest include, but are not limited to:

- Leveraging domain-level meta-data

- Exploiting multiple modalities to achieve robustness to distribution shift

- Frameworks for specifying known invariances/domain knowledge

- Causal modeling and how it can be robust to distribution shift

- Empirical analysis of existing domain generalization methods and their underlying assumptions

- Theoretical investigations into the domain generalization problem and potential solutions

Information for the day of the workshop

The workshop will be streamed via the ICLR workshop page.

Instructions for presenters:

- Please ensure your presentation fits within the allotted time; we will strictly enforce time limits.

- All questions will be asked via Rocket.Chat. For invited and long oral speakers, the session chair will read the questions out and you will answer over Zoom. In the interest of time, those presenting in the spotlight session will answer questions in Rocket.Chat while we are changing speakers.

- Please ensure that you have updated Zoom to the most recent version, and tested that it works.

- Join the Zoom call at least 20 minutes prior to the session you will be presenting in. Check the schedule here.

Important dates

DG Workshop at ICLR 2023

Submission deadline: February 3, 2023 at 12:00 AM UTC (anywhere on earth) via OpenReview

Author notifications: March 3, 2023

Meeting: May 5, 2023